NVIDIA Docker deep dive

What is NVIDIA Docker

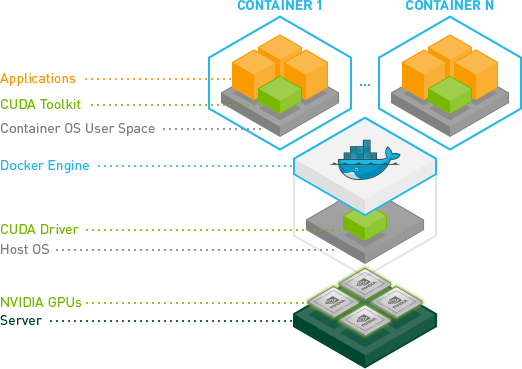

NVIDIA Docker is a project that provides GPU support for Docker containers. It is a cornerstone of NVIDIA NGC and of many container-based AI platforms.

Why NVIDIA Docker is needed

NVIDIA Docker drastically simplifies the deployment of GPU-based applications. Everybody using Linux knows how painful it is to handle the NVIDIA driver stack (remember the famous dirty words Linus Torvalds threw at NVIDIA? ;-P).

With NVIDIA Docker, we can “pass through” GPUs from the host to a container, eliminating the work of manually configuring the GPU inside the container.

Installation

In general, we need to install the Docker engine, the NVIDIA driver and the NVIDIA Docker library on the host.

See the Installation guide for detailed instructions.

Usage

Add the --gpus option to the DockerCLI command when starting a container. The started container will then have access to the host’s GPUs (--gpus all exposes all of them).

Example:

```
$ docker run --rm --gpus all ubuntu nvidia-smi
```

Output:

```
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.51.06    Driver Version: 450.51.06    CUDA Version: N/A      |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  GeForce GTX 1060    On   | 00000000:01:00.0 Off |                  N/A |
| N/A   52C    P8     6W /  N/A |     11MiB /  6078MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
+-----------------------------------------------------------------------------+
```

Overview of Docker Stack

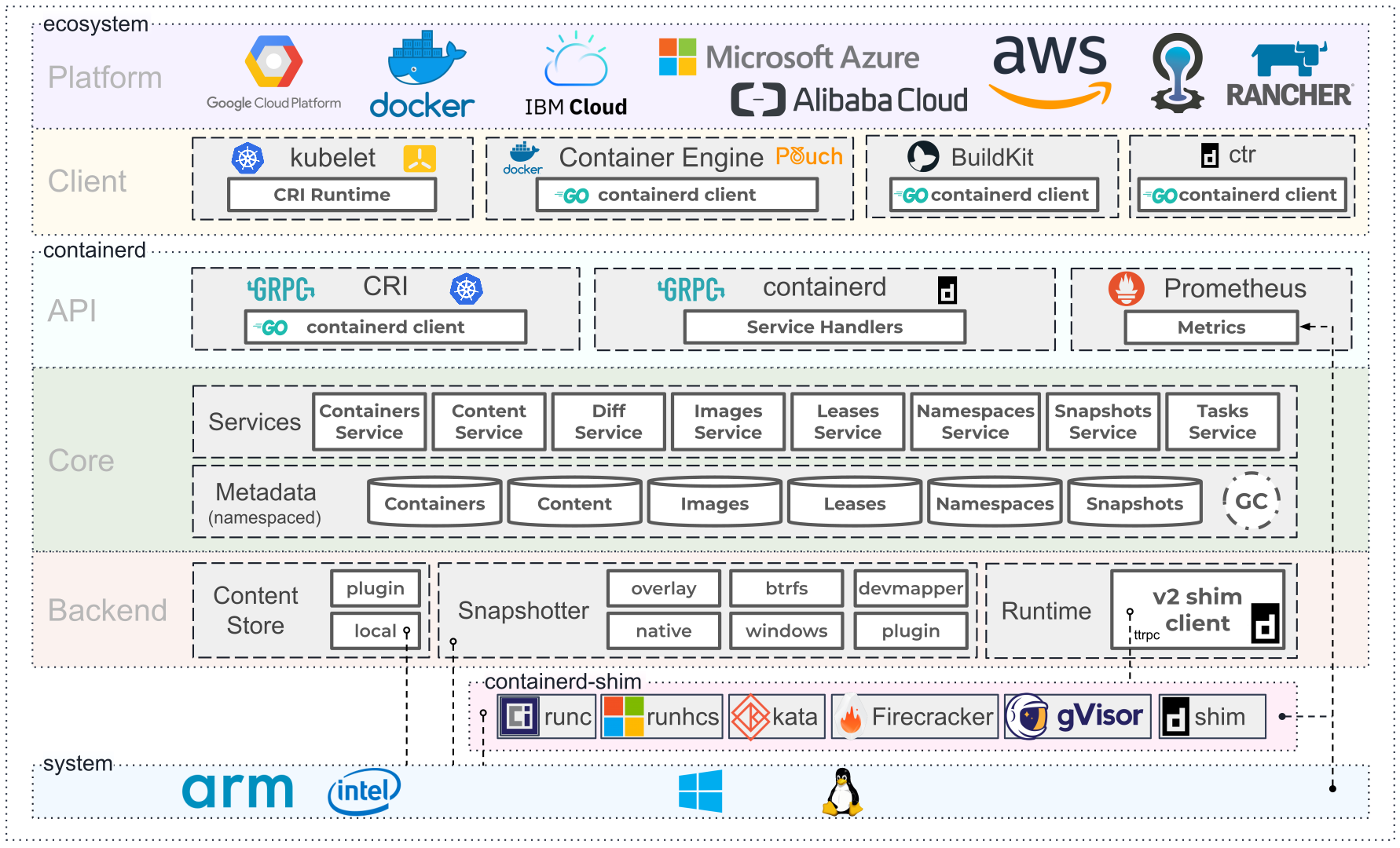

Before diving into the mechanism of NVIDIA Docker, let’s quickly review what is under the hood of Docker:

Architecture

In general, there are two layers: the upper layer interacts with the user, and the lower layer handles the core container logic.

DockerCLI/Dockerd

These two components follow a client-server pattern and together form Docker’s user interface.

DockerCLI is the client through which the user interacts with Docker. Dockerd is a daemon that listens for client requests and forwards them to containerd.

containerd

containerd is the container engine underneath Docker. It implements all of the container-level logic, and only that, including container lifecycle management, image management, etc.

runc

runc is a CLI tool for spawning and running containers according to the OCI specification. Containerd uses this tool to actually start a new container.

OCI (Open Container Initiative) runtime-spec

To run a container, the user must provide runc with a runtime-spec (a config.json file) describing the process to be run.

Config file example

```json
{
    "ociVersion": "1.0.1",
    "process": {
        "terminal": true,
        "user": {
            "uid": 1,
            "gid": 1
        },
        "args": [
            "sh"
        ],
        "env": [
            "PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
            "TERM=xterm"
        ],
        "cwd": "/",
        "capabilities": {
            "bounding": [
                "CAP_AUDIT_WRITE",
                "CAP_KILL",
                "CAP_NET_BIND_SERVICE"
            ]
        },
        "rlimits": [
            {
                "type": "RLIMIT_CORE",
                "hard": 1024,
                "soft": 1024
            }
        ]
    },
    "root": {
        "path": "rootfs",
        "readonly": true
    },
    "mounts": [
        {
            "destination": "/proc",
            "type": "proc",
            "source": "proc"
        }
    ],
    "hooks": {
        "prestart": [
            {
                "path": "/usr/bin/fix-mounts",
                "args": [
                    "fix-mounts",
                    "arg1",
                    "arg2"
                ],
                "env": [
                    "key1=value1"
                ]
            },
            {
                "path": "/usr/bin/setup-network"
            }
        ]
    }
}
```

From the example, we can see that:

- A container is nothing but a process, so a process field is required. Its main sub-fields are:
  - cwd: working directory of the process;
  - args: command and arguments to be executed;
  - env: environment variables.
- The root field specifies the root filesystem the container process runs in, and the mounts field specifies extra filesystems to be mounted.
- Hooks are actions executed at different stages of the container lifecycle; they can be used to customize the container’s behavior. NVIDIA Docker uses hooks to inject GPU functionality into the container.

Mechanism of NVIDIA Docker

Architecture

From the previous discussion, we can see that the key is to provide a hook that injects GPU support into the container.

In general, containerd inside the Docker Engine needs to set up this hook based on the GPU options passed from DockerCLI. The GPU hooking logic itself is provided by a library called libnvidia-container.

libnvidia-container

This project provides a command line program nvidia-container-cli.

The CLI has several commands. The list command lists the components required to configure a container with GPU support, and the configure command performs the actual GPU setup inside the container.

The following is an example output of list:

```
$ nvidia-container-cli list
```

Output:

```
/dev/nvidiactl
/dev/nvidia-uvm
/dev/nvidia-uvm-tools
/dev/nvidia-modeset
/dev/nvidia0
/usr/bin/nvidia-smi
/usr/bin/nvidia-debugdump
/usr/bin/nvidia-persistenced
/usr/bin/nvidia-cuda-mps-control
/usr/bin/nvidia-cuda-mps-server
/usr/lib/x86_64-linux-gnu/libnvidia-ml.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-cfg.so.450.51.06
/usr/lib/x86_64-linux-gnu/libcuda.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-opencl.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-ptxjitcompiler.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-allocator.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-compiler.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-ngx.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-encode.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-opticalflow.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvcuvid.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-eglcore.so.450.51.06
/usr/lib/x86_64-linux-gnu/libnvidia-glcore.so.450.51.06
/usr/lib/x86_64-linux-gnu/libGLX_nvidia.so.450.51.06
/usr/lib/x86_64-linux-gnu/libEGL_nvidia.so.450.51.06
/usr/lib/x86_64-linux-gnu/libGLESv2_nvidia.so.450.51.06
/usr/lib/x86_64-linux-gnu/libGLESv1_CM_nvidia.so.450.51.06
```

We can see that the list contains different kinds of GPU-related components: device nodes (/dev/nvidia*), utilities such as nvidia-smi, and driver libraries such as the CUDA driver library libcuda.

In general, nvidia-container-cli configure mounts the above components into the container to enable GPU support.

Code Analysis

Whoa, coding time! Let’s read the actual source code that implements nvidia-docker.

Process of enabling GPU support of a container

containerd

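The --gpus option is parsed when a new container is created. The excerpt below comes from ctr, containerd’s own command-line client; Docker’s daemon sets up a comparable prestart hook through its own code path: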

```go
//source: https://github.com/containerd/containerd/blob/bc4c3813997554d14449b34d336bca2513e84f96/cmd/ctr/commands/run/run_unix.go#L74
func NewContainer(ctx gocontext.Context, client *containerd.Client, context *cli.Context) (containerd.Container, error) {
	...
	var (
		opts  []oci.SpecOpts
		cOpts []containerd.NewContainerOpts
		spec  containerd.NewContainerOpts
	)
	...
	if context.IsSet("gpus") {
		opts = append(opts, nvidia.WithGPUs(nvidia.WithDevices(context.Int("gpus")), nvidia.WithAllCapabilities))
	}
	...
	spec = containerd.WithSpec(&s, opts...)
	...
	return client.NewContainer(ctx, id, cOpts...)
}
```

The --gpus option maps to the WithGPUs function, which returns an oci.SpecOpts function that appends the NVIDIA prestart hook to the container’s OCI spec.

```go
//source: https://github.com/containerd/containerd/blob/bc4c3813997554d14449b34d336bca2513e84f96/contrib/nvidia/nvidia.go#L68
const NvidiaCLI = "nvidia-container-cli"

...

func WithGPUs(opts ...Opts) oci.SpecOpts {
	return func(_ context.Context, _ oci.Client, _ *containers.Container, s *specs.Spec) error {
		...
		nvidiaPath, err := exec.LookPath(NvidiaCLI)
		...
		s.Hooks.Prestart = append(s.Hooks.Prestart, specs.Hook{
			Path: c.OCIHookPath,
			Args: append([]string{
				"containerd",
				"oci-hook",
				"--",
				nvidiaPath,
				// ensures the required kernel modules are properly loaded
				"--load-kmods",
			}, c.args()...),
			Env: os.Environ(),
		})
		return nil
	}
}
```

libnvidia-container

The configuration options are passed to nvidia-container-cli as command-line arguments. The options accepted by configure are declared like this:

```c
//source: https://github.com/NVIDIA/libnvidia-container/blob/e6e1c4860d9694608217737c31fc844ef8b9dfd7/src/cli/configure.c#L18
(const struct argp_option[]){
    {NULL, 0, NULL, 0, "Options:", -1},
    {"pid", 'p', "PID", 0, "Container PID", -1},
    {"device", 'd', "ID", 0, "Device UUID(s) or index(es) to isolate", -1},
    {"require", 'r', "EXPR", 0, "Check container requirements", -1},
    {"ldconfig", 'l', "PATH", 0, "Path to the ldconfig binary", -1},
    {"compute", 'c', NULL, 0, "Enable compute capability", -1},
    {"utility", 'u', NULL, 0, "Enable utility capability", -1},
    {"video", 'v', NULL, 0, "Enable video capability", -1},
    {"graphics", 'g', NULL, 0, "Enable graphics capability", -1},
    {"display", 'D', NULL, 0, "Enable display capability", -1},
    {"ngx", 'n', NULL, 0, "Enable ngx capability", -1},
    {"compat32", 0x80, NULL, 0, "Enable 32bits compatibility", -1},
    {"mig-config", 0x81, "ID", 0, "Enable configuration of MIG devices", -1},
    {"mig-monitor", 0x82, "ID", 0, "Enable monitoring of MIG devices", -1},
    {"no-cgroups", 0x83, NULL, 0, "Don't use cgroup enforcement", -1},
    {"no-devbind", 0x84, NULL, 0, "Don't bind mount devices", -1},
    {0},
}
```

By specifying these arguments, we choose which GPU capabilities the container gets.

The options are then applied in the configure_command function:

```c
//source: https://github.com/NVIDIA/libnvidia-container/blob/e6e1c4860d9694608217737c31fc844ef8b9dfd7/src/cli/configure.c#L187
int configure_command(const struct context *ctx)
{
    ...
    if (nvc_driver_mount(nvc, cnt, drv) < 0) {
        warnx("mount error: %s", nvc_error(nvc));
        goto fail;
    }
    ...
}
```

The options are passed to nvc_driver_mount, which performs the actual mount operations. Here is the nvc_driver_mount function:

```c
//source: https://github.com/NVIDIA/libnvidia-container/blob/b2fd9616cd544f780b8d63357e747e7e96281743/src/nvc_mount.c#L709
int
nvc_driver_mount(struct nvc_context *ctx, const struct nvc_container *cnt, const struct nvc_driver_info *info)
{
    ...
    /* Procfs mount */
    ...
    /* Application profile mount */
    ...
    /* Host binary and library mounts */
    if (info->bins != NULL && info->nbins > 0) {
        if ((tmp = (const char **)mount_files(&ctx->err, ctx->cfg.root, cnt, cnt->cfg.bins_dir, info->bins, info->nbins)) == NULL)
            goto fail;
        ptr = array_append(ptr, tmp, array_size(tmp));
        free(tmp);
    }
    ...
    /* IPC mounts */
    for (size_t i = 0; i < info->nipcs; ++i) {
        if ((*ptr++ = mount_ipc(&ctx->err, ctx->cfg.root, cnt, info->ipcs[i])) == NULL)
            goto fail;
    }
    /* Device mounts */
    for (size_t i = 0; i < info->ndevs; ++i) {
        if (!(cnt->flags & OPT_NO_DEVBIND)) {
            if ((*ptr++ = mount_device(&ctx->err, ctx->cfg.root, cnt, &info->devs[i])) == NULL)
                goto fail;
        }
    }
    ...
}
```

The mount_* functions are thin wrappers around the Linux mount system call.

See? Nothing fancy: we just mount the relevant binaries, libraries and devices one by one into the container’s filesystem. This is the core logic of NVIDIA Docker.
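The Go sketch below shows the idea for a single host file on Linux: bind-mount it into the container's root filesystem, then remount it read-only (the read-only flag needs a second remount step, because flags other than MS_REC are ignored on the initial bind). It is a simplified, hypothetical illustration, not libnvidia-container's code: it assumes the file lands at the same path inside the container, whereas the excerpt above targets the container's own binary directory (cnt->cfg.bins_dir), and it skips safe path resolution, namespace handling and device setup. bindReadOnly requires root, so main only prints the planned mounts.

```go
//go:build linux

package main

import (
	"fmt"
	"os"
	"path/filepath"
	"syscall"
)

// targetPath maps a host path to the same location inside the container rootfs.
// WARNING: a plain Join does not stop symlinks inside rootfs from escaping it;
// real code must resolve paths safely.
func targetPath(rootfs, hostPath string) string {
	return filepath.Join(rootfs, hostPath)
}

// bindReadOnly bind-mounts a regular host file onto the same path under rootfs,
// then remounts it read-only. Simplified sketch; requires root.
func bindReadOnly(rootfs, hostPath string) error {
	dst := targetPath(rootfs, hostPath)
	if err := os.MkdirAll(filepath.Dir(dst), 0o755); err != nil {
		return err
	}
	// A bind mount needs an existing target, so create an empty placeholder file if needed.
	f, err := os.OpenFile(dst, os.O_CREATE|os.O_RDONLY, 0o644)
	if err != nil {
		return err
	}
	f.Close()
	if err := syscall.Mount(hostPath, dst, "", syscall.MS_BIND, ""); err != nil {
		return fmt.Errorf("bind %s: %w", hostPath, err)
	}
	// Flags other than MS_REC are ignored on the initial bind, so apply read-only via a remount.
	flags := uintptr(syscall.MS_BIND | syscall.MS_REMOUNT | syscall.MS_RDONLY | syscall.MS_NOSUID)
	return syscall.Mount("", dst, "", flags, "")
}

func main() {
	rootfs := "/tmp/example-rootfs" // hypothetical container rootfs
	hostFiles := []string{"/usr/bin/nvidia-smi", "/usr/lib/x86_64-linux-gnu/libcuda.so.450.51.06"}
	for _, p := range hostFiles {
		fmt.Printf("would bind %s -> %s\n", p, targetPath(rootfs, p))
	}
	// Calling bindReadOnly(rootfs, p) would perform the mounts; it needs root privileges.
}
```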

Summary

Here are the key takeaways of this article:

- NVIDIA Docker provides full GPU support to a container through a single --gpus option;
- NVIDIA Docker serves as a containerd hook, providing customized functionality (e.g. GPU support) to Docker containers;
- NVIDIA Docker’s core library, libnvidia-container, works by mounting the host’s NVIDIA GPU driver components into the container’s filesystem.
